What is the CERN Controls Timing System?

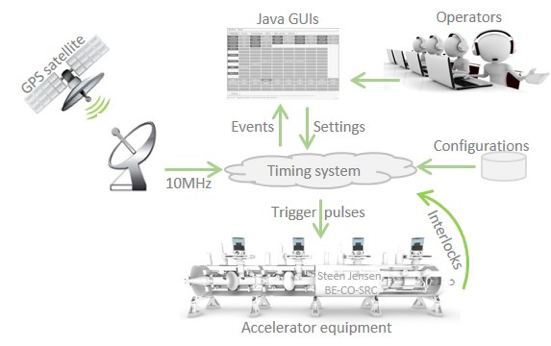

The Timing system ensures that CERN’s accelerator complex behaves as expected as a function of time. It calculates and broadcasts events based on GPS clocks, operator input, database configurations and accelerator interlocks. It is a distributed system, involving both hardware and software components, that evolves constantly through close collaboration with operators, physicists and equipment experts.

CERN continuously delivers particle beams to a range of physics experiments (end-users), each with strict, detailed requirements on the physical characteristics of the beam it receives. To meet these requirements, particle beams traverse a number of accelerators and are manipulated in various ways along the way.

For this to happen, the accelerators repeatedly “play” pre-defined cycles, which usually consist of an injection-acceleration-ejection sequence. Each cycle in turn involves many concurrent beam manipulations, including particle production, bunching, cooling, steering, acceleration and beam transfer, all of which must occur at precise moments in time, often with microsecond or even nanosecond precision.
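One way to picture a cycle is as an ordered list of events, each with a time offset from the start of the cycle. The minimal sketch below assumes exactly that representation; the types `CycleEvent`, `Cycle` and `ScheduledEvent`, the function `schedule`, and any event names or offsets used with them are hypothetical placeholders, not actual CERN cycle definitions. It shows how, once the absolute start time of a cycle is known, each event's absolute trigger time follows by simple addition.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical event within a cycle: a name and an offset in microseconds
// from the start of the cycle.
struct CycleEvent {
    std::string name;
    std::int64_t offset_us;
};

// Hypothetical pre-defined cycle "played" by an accelerator.
struct Cycle {
    std::string name;
    std::int64_t length_us;          // total duration of the cycle
    std::vector<CycleEvent> events;  // e.g. injection, acceleration, ejection
};

// An event placed at an absolute time (microseconds since an arbitrary epoch).
struct ScheduledEvent {
    std::string name;
    std::int64_t time_us;
};

// Converts the relative offsets of a cycle into absolute trigger times,
// given the absolute time at which the cycle starts.
std::vector<ScheduledEvent> schedule(const Cycle& cycle, std::int64_t start_us) {
    std::vector<ScheduledEvent> out;
    out.reserve(cycle.events.size());
    for (const CycleEvent& e : cycle.events) {
        // Every event is expected to fall within the cycle.
        assert(e.offset_us >= 0 && e.offset_us <= cycle.length_us);
        out.push_back({e.name, start_us + e.offset_us});
    }
    return out;
}
```

For example, a hypothetical 1.2 s cycle with an injection at 10 ms and an ejection at 1.1 s, started at t = 5 s, yields trigger times of 5.01 s and 6.1 s.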

Transferring a beam between adjacent accelerators requires a tightly synchronized “rendez-vous” process, in which their RF systems are temporarily aligned so that the beam can be passed from one accelerator to the other.

To make optimal use of CERN’s expensive installations, the entire accelerator complex dynamically changes its cycling configuration every few seconds, minimizing idle time and optimizing the delivery of particle beams to the different end-users. Each change involves reading, processing and writing thousands of values from and to accelerator components, all at the right moments in time.

The Timing system enables this by generating events which orchestrate all these activities in real time, ensuring that:

- End-user beam requests are honoured according to pre-defined priorities.

- Operator input (via Java GUIs) is continuously taken into account.

- Certain (hardwired) accelerator events and conditions are detected and handled.

- Each accelerator correctly plays the required cycles.

- Beams can be transferred from one accelerator to the next.

- Accelerator equipment receives trigger pulses.

- Low-level software receives interrupts.
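The first of these points, honouring beam requests according to pre-defined priorities while taking interlock conditions into account, can be illustrated with a simple selection rule. The sketch below assumes, purely for illustration, that each pending request carries an integer priority and a flag saying whether it may currently be served (for instance, not inhibited by an interlock). The names `BeamRequest` and `select_next` are hypothetical, and the actual Central Timing scheduling logic is not described here and is likely more elaborate.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <vector>

// Hypothetical end-user beam request.
struct BeamRequest {
    std::string user;  // end-user (experiment) requesting the beam
    int priority;      // higher value = more important (assumed convention)
    bool allowed;      // false if, e.g., an interlock currently inhibits it
};

// Returns the allowed request with the highest priority, or std::nullopt if
// no request can currently be served. Ties go to the earliest request in the list.
std::optional<BeamRequest> select_next(const std::vector<BeamRequest>& requests) {
    std::optional<BeamRequest> best;
    for (const BeamRequest& r : requests) {
        if (!r.allowed) continue;  // skip inhibited requests
        if (!best || r.priority > best->priority) best = r;
    }
    return best;
}
```

Re-running such a selection whenever requests or conditions change is one simple way to let the complex adapt its cycling every few seconds.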

How is it made?

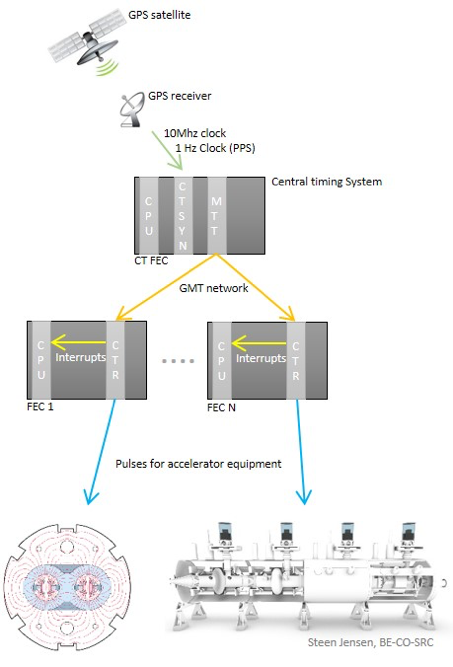

The Timing system is a distributed, hard real-time system consisting of two domains: “Central Timing” (CT) and “Local Timing” (LT).

Put simply, CTs calculate and publish “General Machine Timing” (GMT) events, whereas LTs receive them and generate derived (localized) events in the form of CPU interrupts and physical pulses for the accelerator hardware.
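The split between CT and LT resembles a publish/subscribe pattern: the CT broadcasts each event once, and each local subscriber decides what, if anything, it derives from it. The in-process sketch below models only this relationship. The names `GmtEvent`, `LocalAction` and `TimingNetwork` are hypothetical, and the real system broadcasts over a dedicated cabled network to hardware receivers rather than through function calls.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical GMT event as seen by a receiver: a name and its arrival time.
struct GmtEvent {
    std::string name;
    std::int64_t arrival_us;
};

// Hypothetical local reaction to a GMT event (stand-in for a CPU interrupt
// or a physical pulse).
using LocalAction = std::function<void(const GmtEvent&)>;

// Models the broadcast: the CT publishes an event and every local action
// registered for that event name is invoked.
class TimingNetwork {
public:
    void subscribe(const std::string& event_name, LocalAction action) {
        subscribers_[event_name].push_back(std::move(action));
    }

    // Broadcasts an event; returns how many local actions were triggered.
    std::size_t publish(const GmtEvent& event) const {
        auto it = subscribers_.find(event.name);
        if (it == subscribers_.end()) return 0;
        for (const LocalAction& action : it->second) action(event);
        return it->second.size();
    }

private:
    std::map<std::string, std::vector<LocalAction>> subscribers_;
};
```

Keeping the derivation on the receiving side means the CT does not need to know about each piece of local equipment; it only publishes machine-wide events.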

Each accelerator is associated with a dedicated CT, which is designed to make the accelerator behave as required by the accelerator physicists.

Each CT consists of a dedicated computer containing a CPU and a number of in-house-developed electronics modules (hardware modules).

The CT CPU runs software that communicates with the CT hardware modules, handles external inputs and calculates events accordingly, before publishing them onto a dedicated cabled network.

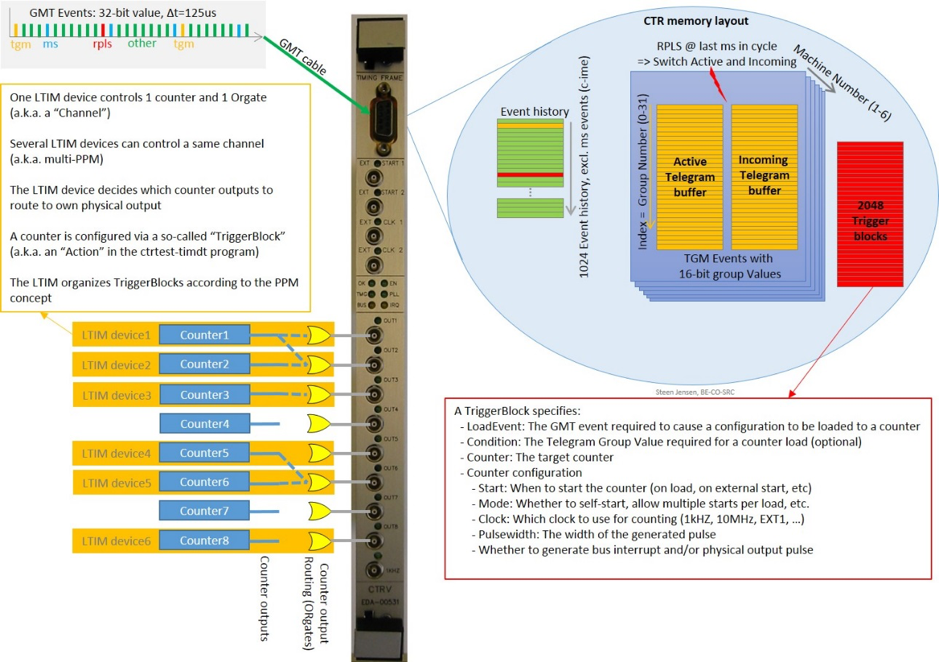

The published GMT events are received by other custom hardware modules, known as “Central Timing Receivers” (CTRs), hosted in “Front-End Computers” (FECs).

Using the “local timing” software, i.e. by creating instances of the so-called “LTIM” class (written in C++), one can configure the CTR modules to generate CPU interrupts and/or physical pulses derived from the arrival of particular GMT events.
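The core idea of a derived local timing is "when GMT event X arrives, wait a configured delay, then fire". The sketch below captures only that idea in software. `LocalTimingConfig`, `Output` and `derive` are hypothetical names and this is not the LTIM class interface; the delay is expressed in microseconds for readability, whereas the real CTR hardware performs the counting itself.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <string>

// Kind of output a local timing produces (the two forms named in the text).
enum class Output { Interrupt, Pulse, Both };

// Hypothetical configuration of one local timing. NOT the LTIM API; it only
// captures "on event X, wait D, then fire".
struct LocalTimingConfig {
    std::string trigger_event;  // GMT event that starts the timing
    std::int64_t delay_us;      // delay between event arrival and output
    Output output;              // what is generated when the timing fires
};

// Returns the time at which the output fires if `event_name` matches the
// configured trigger, or std::nullopt if this local timing ignores the event.
std::optional<std::int64_t> derive(const LocalTimingConfig& cfg,
                                   const std::string& event_name,
                                   std::int64_t arrival_us) {
    assert(cfg.delay_us >= 0);  // an output cannot precede its trigger
    if (event_name != cfg.trigger_event) return std::nullopt;
    return arrival_us + cfg.delay_us;
}
```

With a hypothetical configuration of trigger `"EJECTION"` and a 250 µs delay, an event arriving at t = 1000 µs produces an output at t = 1250 µs, while any other event is ignored.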

Such local timings can form complex dependency trees, for instance to generate a burst of pulses only if a set of conditions is met.
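A dependency tree of this kind can be sketched as nodes that fire a fixed delay after their parent, but only if their own condition holds, so that a leaf fires only when every condition on its path is satisfied. The sketch below assumes this model; `TimingNode`, `Pulse` and `evaluate` are hypothetical names, and each condition is a plain boolean predicate chosen for illustration rather than any real CTR or LTIM condition type.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical node in a dependency tree of local timings. It fires
// `delay_us` after its parent, only if `condition` holds, emitting `count`
// pulses spaced `period_us` apart; its children are then triggered.
struct TimingNode {
    std::string name;
    std::int64_t delay_us = 0;
    int count = 1;               // 1 = single pulse, >1 = burst
    std::int64_t period_us = 0;  // spacing between pulses of a burst
    std::function<bool()> condition = [] { return true; };
    std::vector<std::unique_ptr<TimingNode>> children;
};

struct Pulse {
    std::string source;
    std::int64_t time_us;
};

// Appends every pulse the tree would generate for a trigger at `trigger_us`.
// A node whose condition is false suppresses its whole subtree.
void evaluate(const TimingNode& node, std::int64_t trigger_us, std::vector<Pulse>& out) {
    if (!node.condition()) return;
    const std::int64_t fire_us = trigger_us + node.delay_us;
    for (int i = 0; i < node.count; ++i) {
        out.push_back({node.name, fire_us + i * node.period_us});
    }
    // Children are triggered by the first pulse of this node.
    for (const auto& child : node.children) evaluate(*child, fire_us, out);
}
```

For example, a root firing 100 µs after a trigger at t = 1000 µs, with a child burst of three pulses 50 µs later and 10 µs apart, yields pulses at 1100, 1150, 1160 and 1170 µs; if the child's condition is false, only the root pulse remains.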